We’ve built AI that learns everything about me, but almost nothing about us.

Let me back up.

The story of AI agents didn’t start with ChatGPT. It started with a rat.

In 1951, Marvin Minsky built a machine called SNARC. It simulated a rat learning its way through a maze. Each time it found the "cheese," the machine strengthened the connections that had led it there. Trial, error, reward. That was it. But the idea was radical. Here was one of the first machines that wasn’t just calculating; it was learning from experience.

That loop of perception, action, and feedback became the foundation of everything we now call an agent.

Fast forward to 2016. DeepMind’s AlphaGo defeats Go world champion Lee Sedol. A triumph of reinforcement learning, neural networks, and search. AlphaGo explored vast numbers of possible futures, combining learned intuition with brute-force lookahead.

But there was a catch: it could only play Go. Its genius didn’t transfer. Outside the 19×19 board, it was useless.

The real shift came when the "action space" of AI moved from game moves to words.

Large language models didn’t just master a single game. They entered the game of language itself. And language is universal. Every plan, every question, every idea can be expressed in words. Suddenly, an agent’s environment wasn’t a maze or a board; it was the entire textual world of human thought.

But prediction alone wasn’t enough. These models had to be aligned to human expectations. We did it in two steps:

First, supervised fine-tuning: showing the model examples of good answers. Then, reinforcement learning from human feedback (RLHF): having humans rate outputs, training a reward model on those ratings, and nudging the AI toward what people prefer.
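To make the "training a reward model" step concrete, here is a minimal sketch, not anyone's production recipe. It assumes each answer has already been boiled down to a couple of made-up numeric features (`helpfulness`, `rudeness`), that human raters supplied pairs of (preferred, rejected) answers, and that the reward is a simple weighted sum. The pairwise logistic loss used here is a common choice for preference data; real systems train a neural network over text instead of two hand-picked features.

```python
import math

def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))

def reward(weights, features):
    """Hypothetical linear reward: a weighted sum of answer features."""
    return sum(w * f for w, f in zip(weights, features))

def train_reward_model(preferences, dim, lr=0.1, epochs=200):
    """Fit weights so preferred answers score higher than rejected ones.

    preferences: list of (chosen_features, rejected_features) pairs.
    Minimizes the pairwise loss -log(sigmoid(r(chosen) - r(rejected)))
    with plain stochastic gradient descent.
    """
    weights = [0.0] * dim
    for _ in range(epochs):
        for chosen, rejected in preferences:
            margin = reward(weights, chosen) - reward(weights, rejected)
            # Step size shrinks as the model grows confident in the human's choice.
            scale = 1.0 - sigmoid(margin)
            for i in range(dim):
                weights[i] += lr * scale * (chosen[i] - rejected[i])
    return weights

# Toy data (invented for illustration): features are [helpfulness, rudeness].
toy_preferences = [
    ([0.9, 0.1], [0.2, 0.8]),
    ([0.7, 0.0], [0.6, 0.9]),
    ([0.8, 0.2], [0.3, 0.3]),
]

learned = train_reward_model(toy_preferences, dim=2)
print("learned weights [helpfulness, rudeness]:", learned)
```

In this toy setup the learned weight on helpfulness ends up positive and the weight on rudeness negative, because every preferred answer was more helpful and less rude than its rejected partner. The final RLHF step, nudging the model itself toward higher reward, is left out of the sketch.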

That’s where alignment comes from today: curated data, judgment calls, preference signals.

But it’s fragile.

- There’s scarcity: we don’t have endless examples of "good" behavior.

- There’s bias: human feedback is messy, inconsistent, sometimes contradictory.

- There’s expertise: for hard problems in science, medicine, and law, even experts don’t fully agree on what’s "right."

And under all of that is a deeper problem. Super-alignment assumes humans are static. That our values are frozen, our preferences fixed. A single human may be more or less static compared to a fast-changing AI, but humans in groups are not. Humans evolve. Our norms shift, and our debates never stop. Training an AI on yesterday’s answers risks baking the past into the future.

For years, alignment meant obedience. Make the AI safe. Make it harmless. Make it follow rules. Super-alignment.

But real alignment isn’t just about obedience. It’s about co-evolution.

We’re moving from super-alignment, where AI is made to obey, to co-alignment, where AI and humans learn and adapt together.

And here’s the crucial part: co-alignment only happens in groups.

Because humans don’t grow in isolation. We gather. We form teams, families, study circles, communities. And we gather for a reason: common needs and shared topics. A team gathers to deliver a project. A forum gathers to share knowledge. A movement gathers to pursue a cause.

In small circles, co-alignment looks like an AI that helps a team debate better, plan together, co-create.

In larger communities, it starts with Q&A, events, and engagement, then co-evolves through interaction and imitation, as in Midjourney, where users learn by watching and remixing one another’s prompts.

Groups create the needs, the topics, and the feedback loops. That’s where alignment truly lives.

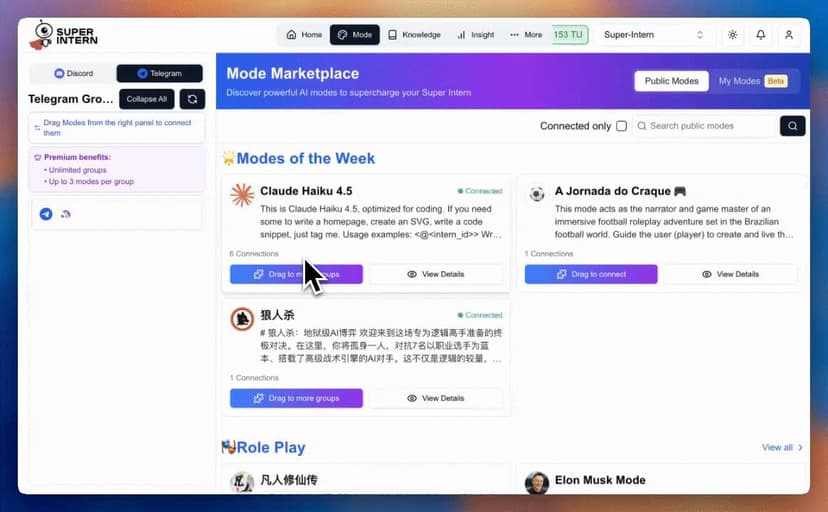

This is why we’re building AMMO: Alignment in Multi-Agent Multi-Player Online.

Imagine environments where dozens of humans and AIs coexist, not just exchanging Q&A, but collaborating, negotiating, and aligning through interaction. It’s not training from the outside, but learning within the group.

If an AI behaves poorly, the group pushes back. If it collaborates well, it gets reinforced. The system learns the way we do: through conversation, correction, and consensus.
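Here is one way that push-back-and-reinforce loop could look in code. This is a toy sketch under loud assumptions, not a description of how AMMO works: the class `GroupAlignedAgent`, the behavior names, and the +1/-1 voting scheme are all hypothetical, invented only to illustrate the idea of a group's averaged reaction shifting what an agent tends to do.

```python
import math

class GroupAlignedAgent:
    """Hypothetical agent whose behavior preferences are shaped by group votes."""

    def __init__(self, behaviors, lr=0.5):
        # Every behavior starts with the same score, so none is favored.
        self.scores = {b: 0.0 for b in behaviors}
        self.lr = lr

    def policy(self):
        """Turn scores into probabilities with a softmax."""
        top = max(self.scores.values())
        exps = {b: math.exp(s - top) for b, s in self.scores.items()}
        total = sum(exps.values())
        return {b: e / total for b, e in exps.items()}

    def update(self, behavior, votes):
        """Apply the group's reaction: votes is a list of +1 (approve) or -1 (push back)."""
        if not votes:
            return  # nobody reacted, nothing to learn
        consensus = sum(votes) / len(votes)  # ranges from -1 to +1
        self.scores[behavior] += self.lr * consensus

# Illustrative round: the group mostly dislikes interruptions and likes summaries.
agent = GroupAlignedAgent(["interrupt", "summarize", "ask_clarifying_question"])
agent.update("interrupt", [-1, -1, +1])
agent.update("summarize", [+1, +1, +1])
print(agent.policy())
```

After this round the agent is more likely to summarize than to interrupt. Averaging the votes is the simplest possible stand-in for "consensus"; a real group setting would have to handle disagreement, shifting norms, and members whose feedback conflicts.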

And that brings us here.

We’ve been obsessed with building AI that knows me. My prompts, my preferences, my personal assistant. But society doesn’t run on solos. It runs on groups. Conversations. Relationships.

The biggest decisions in your life (what to study, where to live, who to trust) didn’t happen alone. They happened in dialogue. With others.

So why should AI stay outside those moments?

The agent of the future isn’t just your assistant. It’s your teammate. It’s part of your community.

Not AI for you.

AI for all of you.

And that’s a future worth building.